Whether you have launched your product or are starting to explore new ideas or features for a longstanding product, you are usually in a space of maximum uncertainty. You have many questions about which elements of the solution are working and why. In these situations, it is important to divide your solution, as well as your acquisition and retention efforts, into what is known and what is unknown, so that you keep improving towards product-market fit. Rather than guess at the answers to these questions, we suggest that you run experiments. Experiments are a powerful tool for startups: they encourage teams to pause and test assumptions before barreling forward with a complete solution that might not work.

Experiments help startups articulate which hypotheses need to be proven and approach them in a rigorous, cost-effective way. The team decides what questions need to be answered about how users experience the product, and then designs a low-fidelity model (or two) to share with users for observation and feedback. For instance, you could test customers’ perceived value of your product based on various taglines, or their willingness to buy and/or use your product depending on how the offer is articulated. You could also test the right time and place to encourage users to refer others. Such tests allow you to make informed decisions so that you invest your time and effort where it makes sense to grow the business. Experimentation is not just a tool, but a mindset. All team members need to buy into the method of experimentation.

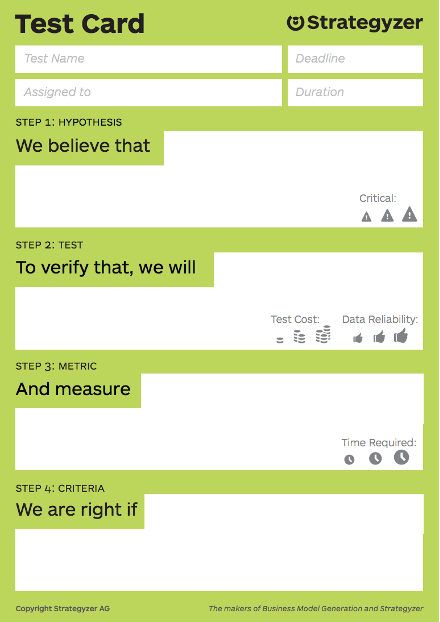

The first step is to identify your key hypotheses (our chapter on value propositions can help) and decide which ones to test. Next, decide which experiment method to deploy. A minimum viable product (MVP), for example, is a type of experiment that allows you to observe how potential users respond to your initial, early-stage offering. It is important to identify your riskiest assumption or hypothesis (e.g., what users want, how the design should work, what messaging to use), and then find the easiest, fastest possible way to test it. Then, use the results of the experiment to correct course and make further progress toward product-market fit.

The list below can help you decide what type of experiments to conduct, and how to create a framework to collect valuable results. Costs vary depending on the type of experiment you select.

You can start testing and measuring your solution as soon as it’s launched, and provided you’re testing just one variable at a time (to ensure you can attribute any differences in results to your experiment), this process can occur on an ongoing basis. This helps ensure continual product improvement.

We believe you should make experimentation part of your startup culture. Instead of relying on assumptions, use experiments to generate data (see our chapter on data to learn how to collect and analyze it) that guides your decisions about what to do, when and how.

That said, adopting an experimental mindset and embedding it into the company’s processes won’t be easy. It requires buy-in from the entire team, and the realization that your great ideas are just hypotheses that can be validated (or, more likely, invalidated). Experimentation requires a lot of rigor, as well as time to properly design the experiments, run them, and extract and document learnings over time.

Designing lean experiments usually requires no expertise beyond what your team already has. Your CTO, product person or anyone on your tech team who has basic data analysis skills can get cracking. If no one on your team can do this, it is an opportunity for someone to upskill.

Experiments are most often used to test either marketing or product concepts. Marketing experiments help ensure that your messaging resonates with customers and that you’re using the right channels; they can also help you quantify expected conversion rates against cost. Product experiments help you confirm that customers will see value in your proposed additions or changes, and that they understand how to use your product.
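To make "conversion rates against cost" concrete, a marketing experiment can boil down to computing, for each channel, the share of reached people who converted and the spend per conversion. The sketch below is a minimal illustration; the `ChannelResult` and `rank_by_cost` names, the channel names and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChannelResult:
    """Results for one marketing channel (all figures here are hypothetical)."""
    name: str
    spend: float      # total spend on the channel
    visitors: int     # people reached by the campaign
    conversions: int  # people who completed the target action (sign-up, purchase, ...)

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0

    @property
    def cost_per_conversion(self) -> float:
        # A channel with no conversions has no finite cost per conversion
        return self.spend / self.conversions if self.conversions else float("inf")


def rank_by_cost(channels: list[ChannelResult]) -> list[ChannelResult]:
    """Return channels ordered from cheapest to most expensive per conversion."""
    return sorted(channels, key=lambda c: c.cost_per_conversion)


if __name__ == "__main__":
    results = [
        ChannelResult("search_ads", spend=500.0, visitors=2000, conversions=40),
        ChannelResult("social_ads", spend=300.0, visitors=3000, conversions=15),
    ]
    for c in rank_by_cost(results):
        print(f"{c.name}: {c.conversion_rate:.1%} converted, "
              f"{c.cost_per_conversion:.2f} per conversion")
```

With these made-up numbers, `search_ads` costs 12.50 per conversion against 20.00 for `social_ads`, even though its total spend is higher, which is the kind of trade-off this comparison is meant to surface.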

Such experiments can be either qualitative or quantitative. For instance, a qualitative marketing test could involve showing customers your marketing materials and asking what appeals to them and why. A quantitative marketing test, such as an A/B test, could run two marketing campaigns targeted at the same users and use a statistical test to judge which one converts customers more reliably.
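One common statistical test for comparing the conversion rates of two campaigns is the two-proportion z-test. The sketch below is one way to implement it with Python's standard library only; it assumes users convert independently of each other and that both groups are large enough for the normal approximation to hold. The function name and the example numbers are illustrative, not taken from any particular tool.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of campaigns A and B.

    Returns (z, p_value), where p_value is two-sided under the null
    hypothesis that both campaigns convert at the same underlying rate.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Under the null hypothesis, pool both groups to estimate the shared rate
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion overall")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Illustrative numbers: 5,000 users shown each campaign
    z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
    print(f"z = {z:.2f}, p = {p:.3f}")
    if p < 0.05:
        print("Difference is statistically significant at the 5% level")
    else:
        print("No significant difference detected; keep testing or treat as a tie")
```

Here a 4% versus 5% conversion rate gives z of roughly 2.4 and p of roughly 0.016, below the conventional 0.05 threshold. Choosing the threshold before looking at the results keeps the test honest.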

Here are some common steps you can take to ensure your experiments are well-designed:

A quantitative experiment in practice: A/B testing

Experiments can take various forms, but the most common type of experimentation is A/B testing, which observes customer behaviour in response to different features, messages or stimuli. A/B tests are randomized experiments with two variants (A and B), representing the control and treatment groups. Ideally, you would run an A/B test for each improvement you want to implement.
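Because an A/B test is a randomized experiment, each user needs to land in the control or treatment group by chance, and ideally stay there on return visits. A widely used approach is to hash a stable user ID together with an experiment name. The sketch below assumes such a user ID exists; `assign_variant`, the experiment name `homepage_tagline` and the 50/50 default split are hypothetical choices for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Assign a user to 'A' (control) or 'B' (treatment).

    Hashing the user ID together with the experiment name gives an
    assignment that looks random across users but is stable for each
    user, so returning visitors always see the same variant.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the first 32 bits of the hash into a number in [0, 1)
    bucket = int(digest[:8], 16) / 2**32
    return "B" if bucket < treatment_share else "A"


if __name__ == "__main__":
    groups = [assign_variant(f"user-{i}", "homepage_tagline") for i in range(10_000)]
    print("share in B:", groups.count("B") / len(groups))
```

Including the experiment name in the hash means the same user can fall into different groups in different experiments, which avoids always exposing the same people to every new treatment.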


When deciding how to execute your experiment, your approach will depend on factors such as the level of detail you need, the resources you have available, and how far along you are in your product development process. Approaches vary based on how closely your test represents the real product (its fidelity) and how much the customer can interact with the MVP relative to a live product (its interactivity). A few product experiment approaches, in order from low to high fidelity and interactivity, include:

Once you have an MVP, take a structured approach to testing it with users. We suggest the following:

Simple experiments helped the Cowrywise team understand what marketing strategies could be leveraged to improve referral rates. The experiments gave them clear, reliable answers within a few short weeks, and indicated what strategies to pursue in the longer term.

Well-designed experiments can further strengthen the evidence you get, which will increase your confidence in making decisions.

A Beginner’s Guide to A/B Testing

In this guide, Just Eat Senior Product Designer Kein Stone discusses what A/B testing is, why you should do it, and its limitations.

Optimizely is a digital experience platform, empowering teams to deliver optimized experiences across all digital touchpoints.

VWO is an A/B testing tool that fast-growing companies use for experimentation and conversion rate optimization.

Convert is another A/B testing tool. It enables you to convert more visitors, plug revenue leaks, and save on testing tool costs with Convert Experiences.

Google Optimize was a free website optimization tool that helped online marketers and webmasters increase visitor conversion rates and overall visitor satisfaction by continually testing different combinations of website content. Google discontinued it in September 2023.

Amazon A/B Testing service is an effective tool to increase user engagement and monetization. It allows you to set up two in-app experiences.

Modesty is a simple and scalable split testing and event tracking framework.

This simple calculator tells you each variation’s conversion rate to help you determine whether A performed better than B, or vice versa.
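A calculator of this kind typically reports each variation's conversion rate and whether the gap between them is larger than chance would explain. The sketch below shows one way to do that with a Wald confidence interval for the difference in rates; it is not necessarily the method the calculator itself uses, and `compare_variations` and the sample numbers are hypothetical.

```python
import math
from statistics import NormalDist


def compare_variations(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       confidence: float = 0.95) -> dict:
    """Conversion rate of each variation plus a confidence interval for B - A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    diff = rate_b - rate_a
    # Unpooled standard error of the difference (Wald interval)
    se = math.sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    low, high = diff - z_crit * se, diff + z_crit * se
    # Only call a winner when the whole interval sits on one side of zero
    if low > 0:
        verdict = "B better"
    elif high < 0:
        verdict = "A better"
    else:
        verdict = "inconclusive"
    return {"rate_a": rate_a, "rate_b": rate_b, "diff": diff,
            "interval": (low, high), "verdict": verdict}


if __name__ == "__main__":
    result = compare_variations(200, 5000, 250, 5000)
    print(f"A: {result['rate_a']:.2%}  B: {result['rate_b']:.2%}  -> {result['verdict']}")
```

An interval that straddles zero means the data cannot yet tell A and B apart, which is a result worth recording rather than a failure.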

Calculate how long you should run an A/B test

Put in a few metrics and this tool tells you how many days you should run your experiment.
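Duration calculators generally work out how many users each variant needs, then divide by the traffic you expect. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions; the function names, the 5% significance and 80% power defaults, and the example inputs are assumptions, and the tool described here may use a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each variant to detect a relative lift in conversion rate.

    Uses the normal-approximation formula for comparing two proportions
    with a two-sided test at significance level alpha.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_run(baseline_rate: float, relative_lift: float, daily_visitors: int,
                variants: int = 2, alpha: float = 0.05, power: float = 0.8) -> int:
    """Days needed if daily_visitors are split evenly across all variants."""
    n = sample_size_per_variant(baseline_rate, relative_lift, alpha, power)
    return math.ceil(n * variants / daily_visitors)


if __name__ == "__main__":
    # Hypothetical inputs: 5% baseline conversion, hoping to detect a 20% relative lift
    n = sample_size_per_variant(0.05, 0.20)
    print(f"{n} users per variant, about {days_to_run(0.05, 0.20, 1000)} days at 1,000 visitors/day")
```

With these hypothetical inputs the sketch asks for roughly 8,160 users per variant, or about 17 days at 1,000 visitors a day. Smaller lifts need far more users, so deciding up front what size of improvement is worth detecting keeps test durations realistic.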

A Massive Social Experiment On You Is Under Way, And You Will Love It

This article explains the benefits of A/B testing, with real-world examples – it should hit home.